This article explores the shift from fast-burning viral controversies to “slow rumble” crises, which are issues that build over months through decentralized, repetitive content amplified by AI.

Using examples from Pizzagate to Tim Hortons, it shows how micro-filter bubbles and AI-generated personas like “Josh” can entrench damaging narratives beyond the reach of traditional corporate responses.

You’ll learn why monitoring early signals, mapping narratives, and using AI-driven audience intelligence are now critical to defending your brand before the damage is irreversible.

It’s wild to think that in just the past three weeks we have seen two very different moments take over the internet, both surging to the top of the feed, commanding attention, and then disappearing almost as quickly.

At a Coldplay concert, a kiss cam caught the CEO and head of HR from the same company in what turned out to be an extramarital affair. The clip raced across TikTok, Reddit, LinkedIn, and broadcast news, topping 130 million views and an estimated reach of more than 1.4 billion.

Days later, American Eagle faced its own wave of attention when a Sydney Sweeney campaign sparked calls for boycotts.

But while we’re all reflecting on eugenics or Gwyneth Paltrow’s brief cameo as Astronomer’s spokesperson, we’re being led astray.

The future of crisis and issues management is less about the flash in the pan. Instead, we’re entering a very different information and issues management environment, one shaped by Generative AI content, algorithmic distribution, and increasingly smaller filter bubbles.

Crises now develop quietly in the background. They spread gradually and repetitively through hundreds of pieces of content shared by people with little direct influence. But collectively, these low-grade signals act like digital water torture, chipping away at brand perception, eroding trust, and requiring new systems to track and new tactics to respond.

The foundation for this kind of decentralized, “slow-burn” crisis was laid years ago, during the 2016 US election. Many readers will be familiar with the Pizzagate conspiracy. It emerged in late 2016 as a crowdsourced investigation into Democratic Party emails released by WikiLeaks. What began as document analysis evolved into a sophisticated, persistent, community-driven narrative about the Democratic Party, a pizza restaurant in Washington, and Hillary Clinton.

Pizzagate, which was followed later by QAnon, created a new blueprint for crisis and issue management. Its core elements include:

Unlike a traditional crisis, this new blueprint is the basis for the new era of issues management. It creates a “slow rumble” crisis that builds over months or years. Slowly, through pure frequency and repetition, this content and these groups create new perceptions in the minds of the public, perceptions that are resistant to conventional corporate response strategies.

Add a few new ingredients to the mix, and you have a powerful accelerant for this “slow rumble” pattern.

Back in April, we published a story on what we are calling the era of Infinite Content, a shift where users of AI models like GPT-5, Sora, Veo 3, etc., are flooding platforms with content created at near-zero cost and near-instant speed. What once required weeks or months of lead time can now be generated in minutes. A single prompt can create dozens of synthetic images, videos, or opinion posts that look real enough to go unquestioned and can be deployed at scale by anyone with an agenda.

The second ingredient, still emerging, is micro-filter bubbles.

Recommendation algorithms on social platforms are designed to keep people engaged for as long as possible. They learn what holds attention, what triggers reaction, and what keeps someone coming back, then serve up a steady flow of similar content. Over time, this creates self-reinforcing information environments where the same ideas, themes, and narratives are repeated.

Up until now, those bubbles have been relatively broad. This was a function of technical and operational limits, where there simply wasn’t enough processing power or infrastructure to target at an individual level.

Generative AI changes that. It is now possible to produce and deliver narratives tailored to increasingly narrow segments at a massive scale. Not only can you group people into a longer tail of behavioural clusters based on shared interests, but you can also serve each cluster content made for it. Within these micro-filter bubbles, information doesn’t have to be true to be trusted; it only needs to align with what has already been seen and believed. Once those beliefs harden, they are extraordinarily resistant to outside correction, making it harder for brands to break through with facts, context, or alternative perspectives.

The result is going to be the proliferation of micro-filter bubbles: hyper-personalized environments, like bespoke WhatsApp groups, Telegram threads, and niche forum channels.

This blueprint for “slow rumble”, with the added elements enabled by Generative AI, has now evolved beyond the political theatre to corporate reputational attacks.

Tim Hortons, one of Canada’s most iconic brands, isn’t just a coffee chain; it’s part of the country’s cultural identity. But beneath that carefully cultivated image, a very different story was taking shape, one that shows how the Pizzagate blueprint and Generative AI are converging to reshape crisis and issue management.

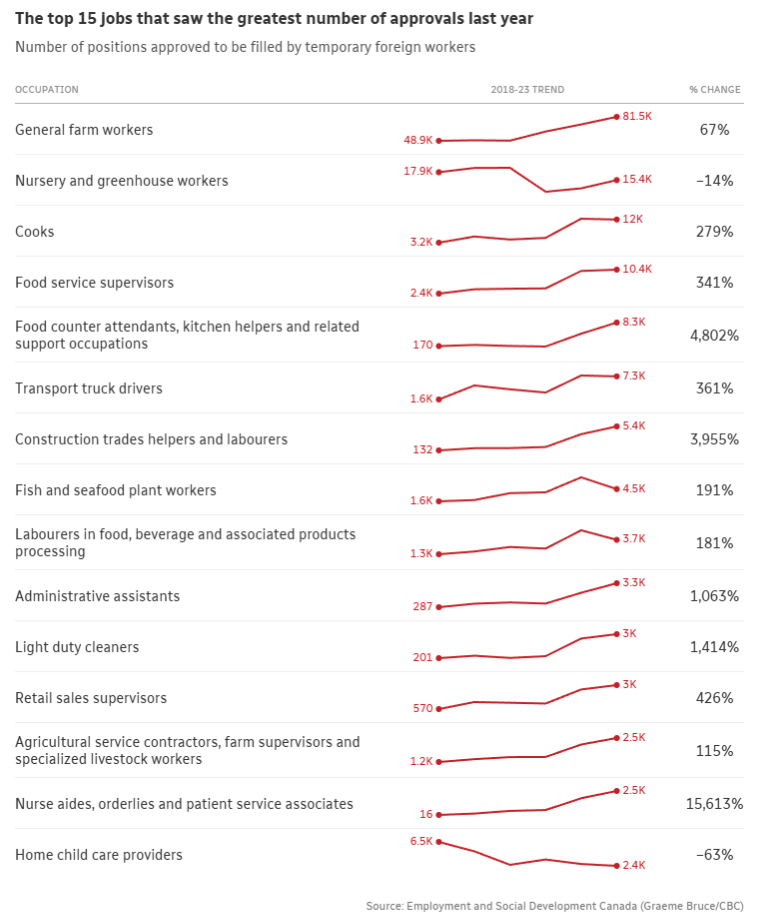

It began with Canada’s Temporary Foreign Worker (TFW) Program, which allows employers to hire foreign workers when no Canadian workers are available. In Ontario, Tim Hortons’ use of TFWs jumped from 58 in 2019 to 714 in 2023, a 1,131% increase. Across Canada’s food service sector, TFW positions for counter and kitchen roles grew from 170 in 2021 to 8,300 in 2023, an increase of more than 4,000%.
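As a quick check on the arithmetic, percent increase is (new − old) / old × 100. The short sketch below applies that to the counts quoted above; the function and variable names are illustrative only. The Ontario counts reproduce the 1,131% cited, while the sector-wide counts work out to roughly 4,782%, so the 4,000% figure is best read as a conservative round number.

```python
def percent_increase(old: float, new: float) -> float:
    """Percentage change from `old` to `new`, relative to `old`."""
    if old == 0:
        raise ValueError("percent increase is undefined when the starting value is 0")
    return (new - old) / old * 100


# Counts as quoted in the article (not independently verified here).
tim_hortons_ontario = percent_increase(58, 714)      # 2019 -> 2023
food_service_canada = percent_increase(170, 8300)    # 2021 -> 2023

print(f"Tim Hortons, Ontario: {tim_hortons_ontario:,.0f}%")   # 1,131%
print(f"Food service, Canada: {food_service_canada:,.0f}%")   # 4,782%
```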

The impact was immediate and visible in communities across the country. Parents watched as their teenagers, who had traditionally found first jobs at Tim Hortons, McDonald's, and other fast-food chains, suddenly couldn't get hired anywhere. Reddit threads dissected government Labour Market Impact Assessment (LMIA) data, traced the creation of numbered companies to hire TFWs, alleged cash-for-job schemes costing up to $100,000, and accused Tim Hortons of betraying Canadian values. While many posts contained xenophobic or racist language, the existence of those comments did not erase the core reputational challenge Tim Hortons was facing around hiring practices, economic impact, and brand identity. “It’s not even Canadian-owned,” users repeated, pointing to the foreign private equity ownership of the company.

By 2023, the brand damage was evident. A conversation that started in forum groups was now accepted as reality by many. On multiple platforms, criticism gave way to calls for a boycott and brand switching. A subreddit, r/BoycottTimHortons, was launched to boycott the company, bringing together people from across the political spectrum, from those concerned about bread-and-butter economic issues to those worried about worker exploitation.

This conversation wasn’t confined to Reddit. It spread to Facebook, TikTok, YouTube, and beyond. Like Pizzagate, it was built off the emerging pattern we outlined above:

In the summer of 2025, a new voice entered the conversation. A young white man named “Josh” appeared on TikTok, venting his frustration about not being able to get a job at Tim Hortons because he didn’t speak Punjabi. His tone was casual, his grievances familiar, and his story built on the slow-burn narrative that had become an entrenched part of the brand conversation.

But Josh was not real. He was an AI-generated persona, created by Nexa, a recruiting software company, using tools like Google’s Veo 3 video generation platform. The videos, complete with natural-sounding audio, emotional inflection, and relatable backdrops, were designed to feel authentic. And for many viewers, they did.

Over several months, the account (@unemployedflex) posted 30–40 videos. None went viral individually, but collectively they reached between 500,000 and 1 million views. The top video hit 200,000 views, while others landed between 16,000 and 58,000. With only 376 followers, the account’s reach came from algorithmic targeting and reinforcement inside filter bubbles, not broad popularity.

The community reaction to Josh's videos revealed how entrenched the opinions expressed in them already were, and how closely they were tied to the brand. Many viewers shared similar job-hunting frustrations or stories of their children being unable to find work at Tim Hortons. Others used the comments to criticize the brand’s hiring practices and quality. Josh even replied to critics, claiming, “I am not racist. Just shining comedy on the job market in Toronto.” The replies created the illusion of a real person engaging in real time, when they were in fact designed to maintain the persona's credibility.

Since TikTok removed the account, similar AI-generated personas have surfaced, recycling the same themes, grievances, and tactics.

Up to now, Tim Hortons' response to the TFW issues and the AI-generated Josh videos has been minimal. The company has issued selective statements only when directly confronted by media stories, describing the AI-generated videos as "extremely frustrating and concerning" and noting they have had "difficulty getting them taken down".

It's hard to tell if these issues have had any financial impact on Tim Hortons' performance. The last few quarterly results have not been stellar, and it is hard to draw a direct line without further research or analysis. Regardless, it is fair to say these issues have caused reputational damage to the brand, which has yet to be addressed.

We’re entering a period where these “slow rumble” patterns will become the dominant mode of reputation and issues management.

These are steady, repetitive, and often invisible until the damage is done. Misinformation now spreads in small bursts, through comment threads, forum posts, group chats, and AI-generated content that blends seamlessly into what people are already consuming.

Most companies aren’t built for this. Traditional frameworks expect a spike, a clear trigger, and a short escalation window. Instead, brands are facing the cumulative effect of content that erodes trust over time.
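The gap between spike-based monitoring and cumulative erosion can be sketched in a few lines. The example below is a minimal illustration, not a description of any particular monitoring product: it compares a simple spike rule (flag any week far above the baseline) with a one-sided CUSUM, a standard change-detection technique that accumulates small, persistent deviations. The weekly mention counts, the function names, and the `z`, `slack`, and `threshold` values are all hypothetical assumptions chosen for illustration.

```python
from statistics import mean, stdev


def spike_alerts(series, baseline, z=3.0):
    """Indices of weeks whose count exceeds baseline mean + z standard deviations."""
    mu, sigma = mean(baseline), stdev(baseline)
    return [i for i, x in enumerate(series) if x > mu + z * sigma]


def cusum_alert(series, baseline, slack=0.5, threshold=5.0):
    """Index of the first week where the accumulated upward drift exceeds
    `threshold`, or None if it never does (one-sided CUSUM on z-scores)."""
    mu, sigma = mean(baseline), stdev(baseline)
    drift = 0.0
    for i, x in enumerate(series):
        # Accumulate only the part of each deviation that exceeds the slack.
        drift = max(0.0, drift + (x - mu) / sigma - slack)
        if drift > threshold:
            return i
    return None


# Hypothetical weekly counts of negative brand mentions.
baseline = [10, 12, 9, 11, 10, 12, 11, 9]          # a "normal" period
rumble = [12, 13, 12, 13, 13, 12, 13, 13, 12, 13]  # slightly elevated, never a spike

print(spike_alerts(rumble, baseline))  # [] : no single week looks alarming
print(cusum_alert(rumble, baseline))   # 4  : the sustained drift is flagged in week 5
```

In this toy data no individual week crosses the spike threshold, yet the accumulated drift is flagged within five weeks, which is the kind of signal a spike-oriented framework would miss.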

To adapt, communications leaders need to rethink how they use AI to monitor, engage, and respond:

Reputation today is more vulnerable to slow erosion than sudden collapse. The loudest threats may still grab headlines, but the more dangerous ones unfold in the background, fed by repetition, falsehoods, and frictionless distribution.

The economic asymmetry is stark. Traditional crisis response relies on expensive, high-intensity interventions. Adversaries can now generate and circulate AI-driven narratives at scale for almost nothing, sustaining them for months with minimal effort. The cost to attack has collapsed.

Communications leaders need to evolve their playbooks to match this new reality and act accordingly. Somewhere, right now, slow rumblings about your brand are already eroding your reputation. Do you know what they are?